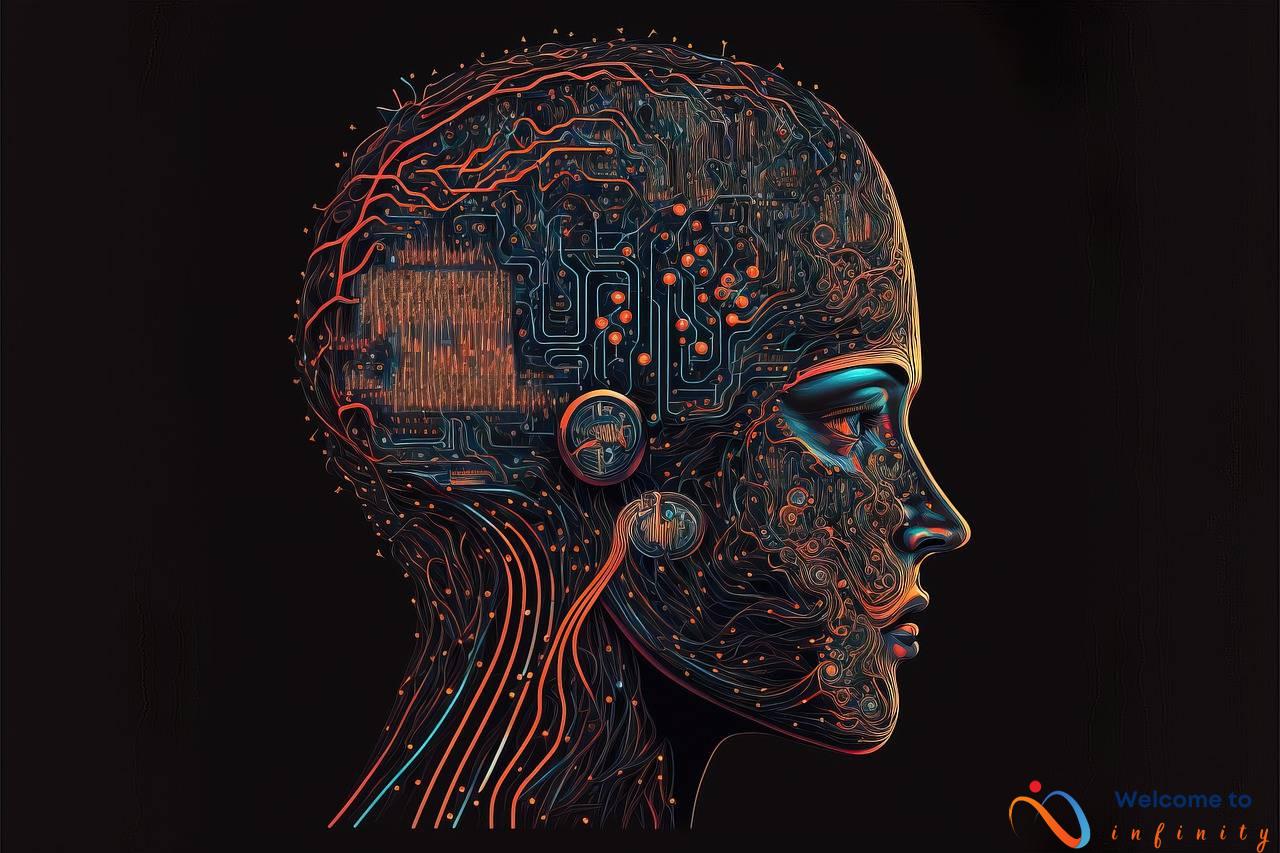

The field of computer vision and image recognition has seen remarkable growth and progress in recent years. The use of advanced algorithms and machine learning techniques has led to exciting developments in image processing, pattern recognition, and object detection. In this article, we will provide an overview of the latest advancements in this fascinating field.

One of the most powerful techniques for image recognition is the Convolutional Neural Network (CNN). CNNs have proven to be effective in identifying and classifying objects in images and videos. They have been used for a variety of applications, including facial recognition, self-driving cars, and medical imaging. In recent years, there have been significant developments in CNN architectures, resulting in faster and more accurate models.

Object detection and tracking is another area where computer vision and image recognition technologies have made significant strides. There are now numerous algorithms available for detecting and tracking objects in real-world images and videos. YOLOv5, a 2020 release in the popular You Only Look Once (YOLO) family of real-time object detectors, is one widely used example, although newer YOLO variants have followed since.

Generative models, such as Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs), have also created exciting possibilities in image processing. They can be used to create realistic images, transfer the style of one image to another, and generate images that do not exist in the real world.

These advancements in computer vision and image recognition have significant practical applications. Medical imaging is one area where computer vision technology is used to assist diagnosis and treatment. Autonomous vehicles are another, where advanced image recognition plays an essential role in developing safe and reliable self-driving cars. However, it's important to consider the ethical implications of using image recognition technologies for surveillance and security.

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks, or CNNs, are a type of deep learning model that has gained a lot of popularity in recent years for its impressive performance in image recognition tasks. Their design is loosely inspired by the visual cortex, in which individual neurons respond to small regions of the visual field, and this makes them particularly effective for analyzing visual imagery.

The key to the success of CNNs is their ability to automatically learn features and patterns that are important for image recognition tasks. This is achieved through a process called convolution, where a small filter is slid across an image and computes a weighted sum at each position, extracting low-level features such as edges, corners, and textures. Different filters detect different types of features, and stacking multiple layers of filters lets the network combine them into increasingly complex, higher-level features as it processes the image.
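To make the sliding-filter idea concrete, here is a minimal sketch in plain Python. The filter values are hand-picked for illustration (a real CNN learns them during training), and the function name `conv2d` and the toy image are assumptions, not part of any library.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 4x6 image: dark left half (0), bright right half (1)
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-picked vertical-edge filter (illustrative, not learned)
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)  # [0.0, 3.0, 3.0, 0.0] for each of the two rows
```

The response is zero over the flat regions and peaks where the filter straddles the dark-to-bright boundary, which is the sense in which a filter "detects" an edge.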

Recent developments in CNN architectures have led to significant improvements in image recognition accuracy. For example, the residual (skip) connections in ResNet give gradients a direct path through the network, which eases the optimization problems that previously made very deep networks hard to train and allows much greater network depth. Another notable development is EfficientNet, which scales network depth, width, and input resolution together and, at the time of its release, achieved state-of-the-art accuracy with fewer parameters and less computation than previous models.

- ResNet: A deep convolutional neural network with residual connections, in which each block learns a residual function relative to its input instead of directly learning the full underlying mapping.

- EfficientNet: A scalable and efficient CNN architecture that achieved state-of-the-art performance on image classification tasks at the time of its release while using fewer parameters and less computation.
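The residual idea can be sketched in a few lines. This is an illustration only: it uses small fully connected layers as a stand-in for the convolutional layers of a real ResNet block, and the helper names (`relu`, `linear`, `residual_block`) are made up for this example.

```python
def relu(v):
    return [max(0.0, x) for x in v]


def linear(v, weights):
    """Multiply vector `v` by a weight matrix given as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), where F is two linear layers with a ReLU between.

    The `+ x` term is the skip connection: the block only has to learn
    the residual F(x), not the whole mapping.
    """
    fx = linear(relu(linear(x, w1)), w2)
    return relu([f + xi for f, xi in zip(fx, x)])


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

# With all-zero weights F(x) = 0, so the block passes x through unchanged
out = residual_block(x, zeros, zeros)
print(out)  # [1.0, 2.0, 3.0]
```

Because a block with near-zero weights behaves like the identity, adding more blocks does not have to make the network worse, which is one intuition for why residual networks can be made very deep.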

CNNs have many practical applications, including image recognition, object detection, and image segmentation. They are also used in fields such as medical imaging, where they can help doctors diagnose diseases and develop treatment plans. As CNNs continue to improve, it's likely that we'll see even more innovative applications and use cases in the future.

Object Detection and Tracking

Object detection and tracking are two of the most widely studied applications of computer vision and image recognition. Detecting objects in real-world images and videos is a challenging task that requires algorithms to be able to identify objects of various shapes, sizes, and colors despite complex backgrounds and lighting conditions.

Several advanced algorithms have been developed in recent years to tackle this problem, including the You Only Look Once (YOLO) algorithm and the Single Shot MultiBox Detector (SSD). These algorithms use convolutional neural networks (CNNs) to classify objects and locate them in the image.

To achieve real-time performance, these algorithms are single-stage detectors: one pass through the network extracts features and predicts class labels and box locations together, with no separate region-proposal stage. YOLOv5, for example, builds on its predecessors with a more efficient backbone architecture and heavier data augmentation during training.
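Detectors of this kind typically emit many overlapping candidate boxes for the same object, and a common post-processing step, not described above, is non-maximum suppression (NMS) based on intersection over union (IoU). The sketch below is a plain-Python illustration under assumed conventions: boxes are `(x1, y1, x2, y2)` tuples, and the threshold of 0.5 is an arbitrary example value.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Return indices of boxes to keep, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap heavily with a better one
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)  # [0, 2]: the second box overlaps the first and is suppressed
```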

Tracking objects over time is an equally challenging problem, especially in crowded scenes where multiple objects may occlude each other. One popular approach for multi-object tracking is the Simple Online and Realtime Tracking (SORT) algorithm, which combines a Kalman filter for predicting each object's position in the next frame with the Hungarian algorithm for assigning new detections to existing tracks.
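The assignment step can be sketched as follows. SORT scores each track-detection pair by the IoU between the Kalman-predicted track box and the detection, then solves for the best one-to-one matching. To stay dependency-free, this sketch swaps the Hungarian algorithm for a brute-force search over permutations, which finds the same optimum on small inputs but scales exponentially. The function names and the `min_iou` value are assumptions for illustration.

```python
from itertools import permutations


def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def assign(track_boxes, detections, min_iou=0.3):
    """Return (track_index, detection_index) pairs that maximize total IoU.

    Brute-force stand-in for the Hungarian algorithm: fine for a handful
    of boxes, far too slow for real scenes.
    """
    n_t, n_d = len(track_boxes), len(detections)
    if n_t <= n_d:
        candidates = (list(enumerate(p))
                      for p in permutations(range(n_d), n_t))
    else:
        candidates = ([(t, d) for d, t in enumerate(p)]
                      for p in permutations(range(n_t), n_d))
    best_pairs, best_total = [], -1.0
    for pairs in candidates:
        total = sum(iou(track_boxes[t], detections[d]) for t, d in pairs)
        if total > best_total:
            best_pairs, best_total = pairs, total
    # Reject matches whose overlap is too small to be the same object
    return [(t, d) for t, d in best_pairs
            if iou(track_boxes[t], detections[d]) >= min_iou]


# Track boxes as predicted for the current frame (e.g. by a Kalman filter)
predicted = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
matches = assign(predicted, detections)
print(matches)  # [(0, 1), (1, 0)]; detection 2 is unmatched and would start a new track
```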

DeepSORT is an extension of SORT that uses a deep appearance descriptor to differentiate between objects with similar motion patterns. This allows for more robust tracking in complex real-world scenarios where objects may move erratically or disappear from view.
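The appearance term can be illustrated with a small sketch. It assumes each detection has already been turned into an embedding vector by a re-identification network (not shown), and that each track keeps a gallery of its recent embeddings. The cost is the smallest cosine distance between the new detection and any stored embedding. The three-dimensional vectors below are toy values.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def appearance_cost(track_gallery, detection_embedding):
    """Smallest distance between the detection and any embedding stored for the track."""
    return min(cosine_distance(e, detection_embedding) for e in track_gallery)


# Toy embeddings: track A looks like the detection, track B does not
gallery_a = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
gallery_b = [[0.0, 1.0, 0.0]]
detection = [0.8, 0.2, 0.0]

cost_a = appearance_cost(gallery_a, detection)
cost_b = appearance_cost(gallery_b, detection)
print(cost_a < cost_b)  # True: the detection is assigned to track A on appearance
```

Because the gallery survives while an object is hidden, a track can be picked up again after an occlusion even when the motion prediction has drifted.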

Overall, object detection and tracking are rapidly advancing areas of computer vision research with numerous practical applications in fields such as autonomous vehicles, security, and surveillance. As new algorithms and architectures continue to be developed, the possibilities for these technologies are only limited by our imagination.

YOLOv5

YOLO (You Only Look Once) is a real-time object detection algorithm that has become increasingly popular in recent years. The original YOLO was developed by Joseph Redmon and his colleagues at the University of Washington, and it reshaped object detection by detecting and classifying objects in images and video in a single network pass, fast enough for real-time use.

YOLOv5 was released in 2020 by Ultralytics, as a separate line of development from Redmon's original work, and was met with a lot of excitement from the computer vision community. It is reported to offer improved accuracy and speed compared to previous versions, making it an even more powerful tool for real-time object detection and classification. Newer YOLO variants have been released since.

YOLOv5 uses a deep neural network to analyze images and video frames and identify objects within them. The algorithm is designed to be highly efficient, with a single forward pass through the network able to detect multiple objects in a single image or video frame. This makes it particularly useful for applications that require fast and accurate object detection, such as autonomous vehicles and security systems.
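As a rough illustration of what happens after that single forward pass, the sketch below turns raw predictions into labeled detections. The input format is a simplification invented for this example, with one `(box, objectness, class_probabilities)` tuple per candidate, and it is not YOLOv5's actual output tensor layout. The scoring rule, objectness multiplied by class probability, is the one used by the YOLO family.

```python
def decode(predictions, class_names, conf_threshold=0.25):
    """Convert raw per-candidate predictions into (label, score, box) detections."""
    detections = []
    for box, objectness, class_probs in predictions:
        # Most likely class for this candidate
        best = max(range(len(class_probs)), key=lambda i: class_probs[i])
        # Final confidence combines "is there an object" and "which class"
        score = objectness * class_probs[best]
        if score >= conf_threshold:
            detections.append((class_names[best], round(score, 3), box))
    return detections


class_names = ["car", "person"]  # hypothetical label set
predictions = [
    ((10, 20, 110, 220), 0.9, [0.1, 0.9]),    # confident person
    ((0, 0, 5, 5), 0.2, [0.5, 0.5]),          # low objectness, filtered out
    ((200, 40, 300, 120), 0.8, [0.95, 0.05])  # confident car
]

detections = decode(predictions, class_names)
for label, score, box in detections:
    print(label, score, box)
```

All candidates come out of the same pass, so the cost per frame does not grow with the number of objects in the scene.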

One of the key features of YOLOv5 is its ease of use and flexibility. It can be trained on a wide range of datasets, from generic object detection to more specific applications such as traffic sign detection. The algorithm can also be fine-tuned to detect the objects of interest for a particular application.

Overall, YOLOv5 remains a widely used option for real-time object detection. Its speed and accuracy make it a valuable tool for a wide range of applications, and its flexibility and ease of use make it accessible to developers and researchers of all levels of expertise.

Multi-Object Tracking (MOT)

Multi-object tracking (MOT) aims to track multiple objects over time in a video sequence. Its applications span various domains, including surveillance, robotics, and autonomous vehicles, and recent advancements in deep learning have led to significant progress in the field.

One of the most popular approaches to MOT is the “tracking by detection” paradigm, which involves detecting objects in individual frames and associating the detections across frames to form tracks. SORT (Simple Online and Realtime Tracking) and DeepSORT (SORT with a deep association metric) are two popular algorithms that follow this paradigm. SORT is a lightweight algorithm that achieves real-time performance on standard CPUs, while DeepSORT uses a re-identification network to improve the association accuracy.
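The overall tracking-by-detection loop can be sketched as below. This is a deliberately simplified illustration and not SORT itself: it matches greedily by IoU instead of solving an optimal assignment, has no motion model, and drops a track as soon as it misses one frame. The `Tracker` class and its parameters are assumptions for this example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


class Tracker:
    """Toy tracker: links each detection to the best-overlapping existing track."""

    def __init__(self, min_iou=0.3):
        self.min_iou = min_iou
        self.tracks = {}   # track id -> last known box
        self.next_id = 0

    def update(self, detections):
        """Consume one frame's detections and return {track_id: box}."""
        assigned = {}
        unmatched = set(self.tracks)
        for det in detections:
            best_id = max(sorted(unmatched),
                          key=lambda t: iou(self.tracks[t], det),
                          default=None)
            if best_id is not None and iou(self.tracks[best_id], det) >= self.min_iou:
                unmatched.discard(best_id)   # continue an existing track
            else:
                best_id = self.next_id       # start a new track
                self.next_id += 1
            assigned[best_id] = det
        self.tracks = assigned  # tracks without a detection are dropped
        return assigned


tracker = Tracker()
frames = [
    [(0, 0, 10, 10), (50, 50, 60, 60)],
    [(52, 50, 62, 60), (1, 0, 11, 10)],       # same two objects, moved slightly
    [(2, 0, 12, 10), (100, 100, 110, 110)],   # one object left, a new one appeared
]
ids_per_frame = [sorted(tracker.update(f)) for f in frames]
print(ids_per_frame)  # [[0, 1], [0, 1], [0, 2]]
```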

Another approach to MOT moves toward the “end-to-end” paradigm, in which detection and tracking are learned jointly instead of being handled by a separate detector and tracker. Joint Detection and Embedding (JDE) is one method in this direction: a single network jointly learns detection and re-identification embeddings, which are then used to associate objects across video frames.

In addition to these approaches, there have been several recent developments in improving the robustness of MOT algorithms in challenging scenarios, such as occlusions and crowded scenes. For example, Graph Convolutional Networks (GCNs) have been used to model the spatial dependencies between objects and improve the association accuracy in crowded scenes.

Overall, MOT is a highly active research area in computer vision, with several recent advancements and developments. While the current algorithms have shown promising results in various applications, there are still many open challenges in making MOT more robust and accurate, especially in real-world scenarios.

Generative Models and Style Transfer

Generative models are a class of unsupervised machine learning algorithms that learn to generate new data resembling the distribution of their training data. One of the most popular generative models is the Generative Adversarial Network (GAN), which pits two neural networks against each other: a generator that produces samples and a discriminator that tries to tell them apart from real data. The generator is trained until its samples are hard to distinguish from real ones. Variational Autoencoders (VAEs) are another class of generative models, which learn to encode data into a latent distribution and decode samples from it into new data. These techniques can create highly realistic images for applications such as producing synthetic data for training other models or generating new content.
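The adversarial objective can be made concrete with the standard GAN losses, evaluated here on hand-picked discriminator outputs instead of real networks. `d_real` and `d_fake` stand for the probability the discriminator assigns to "real" for a real and a generated sample. The generator loss shown is the commonly used non-saturating variant.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D wants D(real) -> 1 and D(fake) -> 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: G wants D(fake) -> 1."""
    return -math.log(d_fake)


# Early in training: the discriminator easily spots fakes
print(discriminator_loss(d_real=0.9, d_fake=0.1))  # small loss for D
print(generator_loss(d_fake=0.1))                  # large loss for G

# Later: the discriminator can no longer tell real from generated
print(discriminator_loss(d_real=0.5, d_fake=0.5))  # D's loss has risen
print(generator_loss(d_fake=0.5))                  # G's loss has fallen
```

Training alternates between lowering the first loss with respect to the discriminator's weights and the second with respect to the generator's, so each network improves against the other.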

Style transfer is another practical application of generative models. It is the process of changing the style of an input image to make it resemble the style of another image while retaining its content. This process involves defining and extracting the content and style features of two images, which are then used to create a new image that combines the content of one image and the style of the other.

Notable advancements in generative models include StyleGAN2, a refinement of the StyleGAN algorithm, which is known for its ability to produce high-quality synthetic images that are nearly indistinguishable from real photographs. There have also been many developments in neural style transfer, including techniques that can transfer the style of a specific artist's paintings to other images or videos.

Overall, the use of generative models and style transfer techniques has opened up new possibilities for image creation and manipulation, and these advances are sure to continue as the field of computer vision and image recognition evolves.

StyleGAN2

StyleGAN2 is a refinement of the popular StyleGAN technique, which is used to create highly realistic synthetic images. The original StyleGAN combined progressive growing, adaptive instance normalization, and style mixing regularization. StyleGAN2 keeps style mixing but revises the other two in order to remove characteristic image artifacts, and adds a path length regularizer, producing images that can be very hard to distinguish from real photographs.

One of the key features of StyleGAN2 is its ability to produce images with a high level of diversity. Unlike earlier generative models, which tended to produce images with a similar style or appearance, StyleGAN2 can generate a very wide variety of images, each with its own style and characteristics. This has made it a popular tool for a wide range of applications, from art and entertainment to marketing and advertising.

- Progressive Growing: This technique involves starting with low-resolution images and gradually increasing the resolution over time, which allows for a smoother and more stable training process. It was used in the original StyleGAN; StyleGAN2 drops it and instead trains a network with skip or residual connections at full resolution from the start.

- Adaptive Instance Normalization: This method normalizes each feature map in the generator and then scales and shifts it using a style derived from the latent code, which is how the style controls the image at each layer. StyleGAN2 replaces it with weight demodulation, which achieves a similar effect without the blob-like artifacts associated with the original.

- Style Mixing Regularization: This technique generates some training images from two latent codes instead of one, using the first code for the layers before a randomly chosen crossover point and the second code after it. This discourages the network from assuming that adjacent styles are correlated, and it makes it possible to combine the coarse features of one generated image with the fine details of another.
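Style mixing, as described in the StyleGAN papers, uses two latent codes with a random crossover layer. A minimal sketch of just that bookkeeping follows. The latent codes are toy lists, `mix_styles` is a made-up name, and the synthesis network that would consume the per-layer styles is not shown.

```python
import random


def mix_styles(w_a, w_b, num_layers, rng=random):
    """Return per-layer styles: w_a before a random crossover layer, w_b from it on."""
    crossover = rng.randrange(1, num_layers)  # at least one layer from each code
    styles = [w_a if layer < crossover else w_b for layer in range(num_layers)]
    return styles, crossover


rng = random.Random(0)  # seeded for repeatability
w_a = [0.1, 0.1, 0.1, 0.1]  # toy latent code controlling the coarse layers
w_b = [0.9, 0.9, 0.9, 0.9]  # toy latent code controlling the fine layers

styles, crossover = mix_styles(w_a, w_b, num_layers=8, rng=rng)
print(crossover)
print(["A" if s is w_a else "B" for s in styles])
```

Early layers of the generator tend to control coarse attributes such as pose and shape, while later layers control fine detail and color, so the crossover point determines which image contributes which.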

StyleGAN2 has been used in a variety of applications, from the creation of photorealistic artwork to the generation of synthetic images for use in film and television. It has also been explored for medical imaging and other scientific applications, where the ability to generate realistic images is valuable.

Overall, StyleGAN2 represents a major step forward in image synthesis, and is likely to have a significant impact on a wide range of industries and applications in the years to come.

Neural Style Transfer

Neural Style Transfer (NST) is a technique used to apply the style of one image onto another. In other words, it can be used to generate new images that have the content of one image and the style of another image. This technique is achieved using convolutional neural networks (CNNs) and optimization algorithms.

The process starts by defining a content image and a style image. The content image is the image whose content we want to preserve, while the style image is the image whose stylistic features we want to incorporate into the new image. In the original formulation, the CNN is not trained for this task. A network already pretrained for image classification, such as VGG, is used as a fixed feature extractor: activations in its deeper layers capture content, while correlations between feature maps (Gram matrices) capture style.

The final image is generated by optimizing the pixel values of an initial image, which can be random noise or a copy of the content image. The optimization minimizes a weighted sum of two terms: a content loss, the difference between the CNN feature maps of the generated image and those of the content image, and a style loss, the difference between the Gram matrices of the generated image's feature maps and those of the style image.
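In the original neural style transfer formulation, the two terms are a content loss on feature maps and a style loss on their Gram matrices. The sketch below computes both on tiny hand-written "feature maps" instead of real CNN activations, and leaves out the gradient-based optimization loop. The function names and the loss weights are illustrative assumptions.

```python
def gram_matrix(features):
    """Channel-by-channel correlations.

    `features` is a list of C channels, each a flat list of N activations.
    Summing over positions discards where things are and keeps which
    features occur together, which is what "style" means here.
    """
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in features]
            for ci in features]


def mse(a, b):
    """Mean squared difference between two equally shaped 2D lists."""
    flat_a = [v for row in a for v in row]
    flat_b = [v for row in b for v in row]
    return sum((x - y) ** 2 for x, y in zip(flat_a, flat_b)) / len(flat_a)


def total_loss(generated, content, style, content_weight=1.0, style_weight=1000.0):
    content_loss = mse(generated, content)
    style_loss = mse(gram_matrix(generated), gram_matrix(style))
    return content_weight * content_loss + style_weight * style_loss


# Two channels, three spatial positions each
content = [[1, 2, 3],
           [0, 1, 0]]
# Same activations with the spatial positions shuffled
shuffled = [[3, 1, 2],
            [0, 0, 1]]

print(gram_matrix(content) == gram_matrix(shuffled))  # True: same "style"
print(mse(content, shuffled) > 0)                      # True: different "content"
```

The shuffled example shows why the two losses pull in different directions: the style term is blind to spatial layout, while the content term is sensitive to it.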

Recent advancements in this field include the use of adaptive instance normalization (AdaIN), which aligns the mean and variance of the content features with those of the style features. This allows arbitrary styles to be transferred in a single forward pass and gives control over the degree of stylization. Other advancements include the use of multiple styles, which allows for the incorporation of multiple styles into a single image, and the use of perceptual (deep feature) losses, which improve the quality and fidelity of the generated images.
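For a single feature channel, AdaIN amounts to a few lines of arithmetic. The sketch below applies it to toy lists of activations. In a real system it would run on encoder feature maps and be followed by a decoder network, both omitted here. The `alpha` blend corresponds to the stylization-strength control described in the AdaIN paper.

```python
import math


def mean_std(channel, eps=1e-5):
    """Mean and standard deviation of one feature channel."""
    mean = sum(channel) / len(channel)
    var = sum((v - mean) ** 2 for v in channel) / len(channel)
    return mean, math.sqrt(var + eps)


def adain(content_channel, style_channel, alpha=1.0):
    """Re-scale content features to have the style features' mean and std.

    alpha = 1.0 gives full stylization, alpha = 0.0 returns the content
    features unchanged.
    """
    c_mean, c_std = mean_std(content_channel)
    s_mean, s_std = mean_std(style_channel)
    stylized = [s_std * (v - c_mean) / c_std + s_mean for v in content_channel]
    return [alpha * s + (1.0 - alpha) * c
            for s, c in zip(stylized, content_channel)]


content_features = [1.0, 2.0, 3.0, 4.0]
style_features = [10.0, 20.0, 30.0, 40.0]

full = adain(content_features, style_features)
half = adain(content_features, style_features, alpha=0.5)
print(full)  # same ordering as the content, but on the style's scale
print(half)  # halfway between the content features and the stylized ones
```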

Overall, NST has many exciting applications in the fields of art, design, and entertainment, and has the potential to revolutionize the way we think about image creation and manipulation.

Applications and Future Directions

Computer vision and image recognition technologies are rapidly advancing, and their practical applications are becoming increasingly widespread. One application of these technologies is in the field of medical imaging, where they are being used to improve patient outcomes and assist healthcare providers in diagnosing and treating diseases.

Another practical application of computer vision and image recognition is in the development of autonomous vehicles. These technologies are critical in enabling self-driving cars to navigate their surroundings and make intelligent decisions in real-time. However, there are still significant challenges that researchers must address, such as ensuring the safety and reliability of autonomous vehicles in complex driving scenarios.

Surveillance and security is another area in which computer vision and image recognition technologies are being used. From facial recognition to object detection and tracking, these technologies are enabling improved security and monitoring of public areas and critical infrastructure. However, there are also ethical considerations that must be taken into account when implementing these technologies, such as ensuring that individuals' privacy rights are respected.

Looking towards the future, there are many exciting research directions and possibilities for computer vision and image recognition technologies. One area of focus is in developing more advanced generative models, which can create increasingly realistic and complex images. Another area of interest is in developing more sophisticated algorithms for object detection and multi-object tracking, which will be critical for enabling autonomous vehicles and improving surveillance and security.

In conclusion, computer vision and image recognition technologies have already had a significant impact on many areas of our lives, and their potential applications are only increasing. As researchers continue to make advancements in these fields, we can expect to see even greater benefits in the years to come.

Medical Imaging

Medical imaging is one of the most critical areas where computer vision and image recognition technologies are being used extensively. These tools have the potential to revolutionize the way we diagnose and treat a wide range of medical conditions. The use of these technologies is not limited to any particular medical specialty; instead, it has found applications in almost all fields of medicine, including radiology, cardiology, pathology, and neurology.

X-ray analysis is one of the most common applications of computer vision and image recognition in medical imaging. By analyzing X-ray images, AI algorithms can identify and classify different structures of the human body, such as bones, organs, and tissues. This technology has enormous potential to improve the accuracy and efficiency of medical diagnoses, especially in emergency situations. Additionally, computer vision technologies can be used to detect abnormalities in mammograms and other imaging tests used in cancer screening, which can lead to early detection and better treatment outcomes.

In addition to X-ray analysis, computer vision and image recognition technologies are also being used with other imaging modalities. For instance, AI algorithms can interpret magnetic resonance imaging (MRI) and computed tomography (CT) scans to help detect and diagnose a wide range of medical conditions, including cancer, heart disease, and neurological disorders. These algorithms can analyze vast amounts of medical images and highlight areas of concern that might otherwise be missed by human radiologists and physicians.

Overall, the use of computer vision and image recognition technologies in medical imaging is still in its early stages, but it has enormous potential to improve patient outcomes and revolutionize the field of medicine. As more research is conducted in this area, we can expect to see even more innovative applications of AI in medical imaging that can help us diagnose and treat a wide range of medical conditions more effectively and efficiently.

Autonomous Vehicles

Autonomous vehicles are no longer just a thing of the future. They are already operating on public roads in limited deployments, and interest in them continues to grow. Computer vision has played a critical role in the development of these vehicles, enabling them to navigate through traffic, detect obstacles, and make decisions about how to proceed.

One of the main challenges that remains to be addressed is the reliability of these systems. While autonomous vehicles have come a long way in recent years, there is still a significant risk of accidents due to perception failures, software errors, or malfunctioning hardware. The potential for cybersecurity threats is also a major concern.

Another issue that must be addressed is the legal and regulatory framework for autonomous vehicles. This technology raises a host of thorny legal and ethical questions, such as who will be held liable in the event of an accident, and how much control should be given to the human driver in case of an emergency.

Despite these challenges, the potential benefits of autonomous vehicles are enormous. They are expected to reduce traffic congestion, lower the number of accidents, and improve overall transportation efficiency. As computer vision and image recognition technologies continue to improve, we can expect to see even greater advancements in autonomous vehicle development in the years to come.

Surveillance and Security

Image recognition technologies are being increasingly utilized for surveillance and security purposes, including facial recognition, object recognition, and license plate recognition. While these technologies can provide valuable assistance in identifying potential threats and enhancing public safety, their use also raises significant ethical concerns.

One of the primary concerns regarding image recognition for surveillance is the potential for abuse and violation of privacy. Facial recognition, in particular, has come under scrutiny for misidentifying individuals, with error rates reported to vary across demographic groups, and for its potential to be used for discriminatory purposes. There are also concerns about the use of these technologies for mass surveillance, which can lead to an erosion of civil liberties and have a chilling effect on free speech and other fundamental rights.

Another consideration when using image recognition technologies for surveillance and security is the risk of bias and injustice. These technologies can perpetuate existing societal biases and deepen inequalities, particularly if they are used to target specific populations or neighborhoods. In addition, the lack of transparency and accountability in the development and implementation of these technologies can make it difficult to identify and correct any biases or errors that may arise.

Despite these challenges, many organizations and governments continue to invest in image recognition technologies for surveillance and security purposes. To address these concerns, it is essential to establish clear regulations and guidelines for the ethical use of these technologies. This includes addressing issues such as bias, privacy, and accountability, and ensuring that these technologies are used in a manner that is fair, transparent, and respectful of individual rights.

In conclusion, while image recognition technologies can provide valuable assistance for surveillance and security purposes, their use must be accompanied by a robust framework of ethical considerations and accountability. By carefully considering the potential risks and benefits of these technologies, we can work towards a more just and secure society.